Yesterday I had to miss the debate on meta-analyses at #rED18, but I did read Robert Coe's post.

It’s true there has been quite a stir about Hattie and meta-analyses lately, and to me the discussion has several different aspects.

One thing I have noticed is that when effect sizes are presented differently, people can see the complexity that is often obscured.

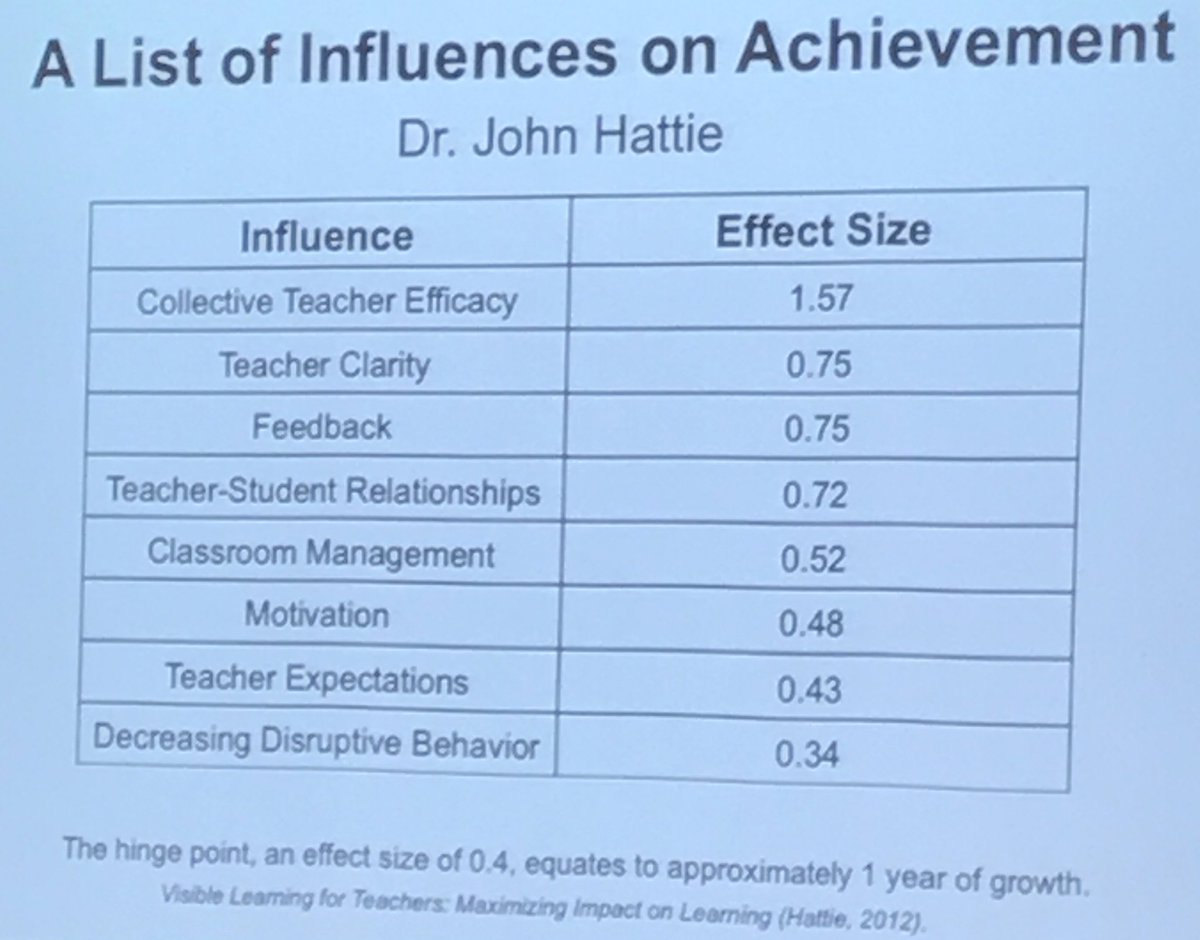

Compare the way Hattie presents effect sizes in his infamous lists of effects, for example:

And compare that with this graph from Dietrichson et al. (2017):

In this second example you can see the range of effects hidden behind the average effect size. It is still an abstraction of a more complex reality, but it invites interested readers to check what explains the large differences between the effect sizes reported for small-group instruction. It also shows that while coaching and mentoring students can have a positive effect, it can also have a negative one.
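To make the point about averages concrete, here is a minimal sketch with invented numbers. The two interventions and their study-level effect sizes are hypothetical, not taken from Hattie or Dietrichson et al., and the mean is unweighted, whereas a real meta-analysis would weight studies by their precision. Both interventions get the same average, but only the range reveals that one of them sometimes does harm.

```python
from statistics import mean

# Hypothetical study-level effect sizes for two made-up interventions.
# These numbers are invented for illustration only.
effects = {
    "intervention A": [0.30, 0.32, 0.28, 0.30],
    "intervention B": [0.90, -0.20, 0.65, -0.15],
}

def summarize(gs):
    """Return the unweighted mean, minimum and maximum of a list of effect sizes."""
    return mean(gs), min(gs), max(gs)

for name, gs in effects.items():
    avg, low, high = summarize(gs)
    # The averages look identical; the range shows how much the studies disagree
    print(f"{name}: mean = {avg:.2f}, range = {low:.2f} to {high:.2f}")
```

A list of averages would rank these two interventions as equal; a graph like the one above would show immediately that they are not.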

Reference:

- Dietrichson, J., Bøg, M., Filges, T., & Klint Jørgensen, A. M. (2017). Academic interventions for elementary and middle school students with low socioeconomic status: A systematic review and meta-analysis. Review of Educational Research, 87(2), 243-282.

While researchers should always report how confident they are in their point estimates, this misses the point that comparing effect sizes in this way has been discredited as a method for comparing the effectiveness of different types of intervention. The assumption that tutoring studies use the same distribution of samples, comparison treatments and outcome tests as computer-assisted instruction studies does not hold. See Simpson's recent papers, such as https://goo.gl/Txnk23, or listen to Ollie Lovell’s education podcast https://goo.gl/jFLaeN